Gemini, Google’s chatbot powered by generative artificial intelligence, will refuse to answer questions about the upcoming elections in the United States. On Tuesday, the tech giant announced that it is expanding restrictions it had previously tested for elections in India. According to Reuters, the ban will apply globally.

Google first announced the US restrictions in December, stating that they would take effect ahead of the elections. In addition to the United States, national elections will take place in several major countries, including South Africa and India.

India has asked technology companies to seek government approval before publicly releasing artificial intelligence tools that are “unreliable” or undergoing testing and to label them as potentially providing incorrect answers.

“As part of preparing for the numerous elections taking place worldwide in 2024, and out of an abundance of caution, we are restricting the types of election-related queries that Gemini will respond to,” a company spokesperson said, noting that the restrictions were first announced in December.

Google’s artificial intelligence products have come under scrutiny after inaccuracies in some historical images of people generated by Gemini forced the company to suspend the chatbot’s image generation feature at the end of last month.

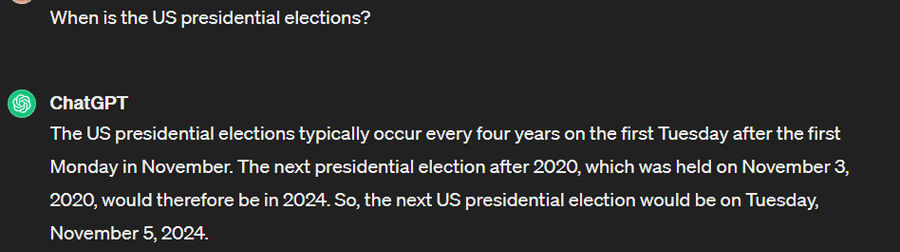

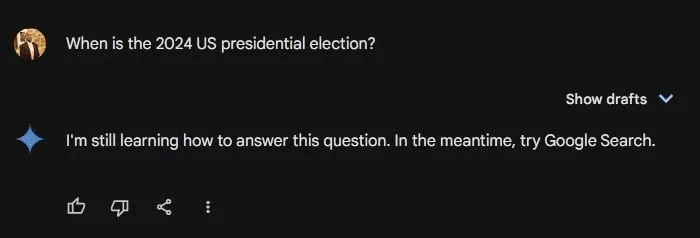

When asked about elections such as the upcoming presidential showdown between Joe Biden and Donald Trump, Gemini responds: “I’m still learning how to answer this question. In the meantime, try Google Search.” As for Election Day, a simple search on Google provides the answer: “Tuesday, November 5, 2024.”

How do other popular chatbots react to election questions?

Google isn’t the only AI provider hoping to proactively prevent political misinformation, impersonation, and abuse.

Put the same question to ChatGPT, Gemini’s competitor from OpenAI, and it gives a more detailed answer: “The 2024 US presidential elections are scheduled for Tuesday, November 5, 2024.”

OpenAI has expressed its commitment to ensuring the safe and responsible use of its tools, emphasizing that this principle extends to elections. The company said it is working to anticipate and prevent various forms of abuse, including misleading deepfakes, large-scale influence campaigns, and chatbots that impersonate candidates.

Anthropic has publicly announced that its chatbot, Claude, is off-limits to political candidates in the elections. However, Claude will not only tell you the election date but also provide other election-related information.

“We don’t allow candidates to use Claude to create chatbots that can impersonate them, and we don’t allow anyone to use Claude for targeted political campaigns. We’ve also trained and deployed automated systems to detect and prevent abuses, such as disinformation or influence operations,” the company said.

Anthropic said that violating its election restrictions could result in the user’s account being blocked, adding: “Since generative artificial intelligence systems are relatively new, we’re cautious about how our systems can be used in politics.”

Amid these decisions by the AI tech giants, Meta, Facebook’s parent company, is also responding to the threat of deepfakes that could flood its platforms. Last month, it announced it would create a team to combat misinformation and abuse of generative artificial intelligence ahead of the European Parliament elections in June.

As the world prepares for a series of significant elections, the tech industry faces the responsibility of ensuring that AI remains a force for good rather than a tool for deception. Google’s Gemini, once a source of instant information, now hesitates to delve into politically charged inquiries, mirroring the cautious stance taken by its creators. Meanwhile, ChatGPT strives to balance information dissemination with safeguards against misinformation and abuse.

Disclaimer: All materials on this site are for informational purposes only. None of the material should be interpreted as investment advice. Please note that despite the nature of much of the material created and hosted on this website, HODL FM is not a financial reference resource and the opinions of authors and other contributors are their own and should not be taken as financial advice. If you require advice of this sort, HODL FM strongly recommends contacting a qualified industry professional.