Ethereum co-founder Vitalik Buterin cautions against AI-run crypto governance, citing ChatGPT jailbreak exploits and data leaks. He proposes an alternative “info finance” model.

AI Governance Poses Security Risks

Ethereum co-founder Vitalik Buterin has expressed caution regarding the use of artificial intelligence in cryptocurrency governance. He noted that projects allowing AI to allocate funds or manage governance processes could be exploited by malicious actors.

“If you use an AI to allocate funding for contributions, people WILL put a jailbreak plus ‘gimme all the money’ in as many places as they can,” Buterin wrote in a recent X post.

This is also why naive "AI governance" is a bad idea.

— vitalik.eth (@VitalikButerin) September 13, 2025

If you use an AI to allocate funding for contributions, people WILL put a jailbreak plus "gimme all the money" in as many places as they can.

As an alternative, I support the info finance approach ( https://t.co/Os5I1voKCV… https://t.co/a5EYH6Rmz9

The warning followed a demonstration by Eito Miyamura, creator of the AI data platform EdisonWatch, which showed a new function added to OpenAI’s ChatGPT could be exploited to leak private information. The update, which enables ChatGPT to integrate with external software via Model Context Protocol tools, allowed Miyamura to trick the AI into accessing and forwarding private email data with minimal user interaction.

Miyamura described the update as a “serious security risk,” noting that decision fatigue could cause users to approve malicious AI actions without fully understanding the implications.

By sending a compromised Google Calendar invite containing a hidden prompt injection, an attacker could manipulate ChatGPT into accessing a user's Gmail account and extracting sensitive information without the user's explicit consent or interaction.

This type of attack leverages indirect prompt injection, where malicious instructions are embedded in calendar event descriptions, and ChatGPT unknowingly executes these commands upon accessing the invite.

Sadly, it really looks that simplistic, as Miyamura described.

We got ChatGPT to leak your private email data 💀💀

— Eito Miyamura | 🇯🇵🇬🇧 (@Eito_Miyamura) September 12, 2025

All you need? The victim's email address. ⛓️💥🚩📧

On Wednesday, @OpenAI added full support for MCP (Model Context Protocol) tools in ChatGPT. Allowing ChatGPT to connect and read your Gmail, Calendar, Sharepoint, Notion,… pic.twitter.com/E5VuhZp2u2

As AI continues to play an increasingly significant role in various sectors, including cryptocurrency governance, it is imperative to implement robust security measures to safeguard against such vulnerabilities.

This incident shows the heightened security risks associated with AI tools integrated with personal data sources and calls for stronger protections by default.

Buterin Advocates Alternative “Info Finance” Approach

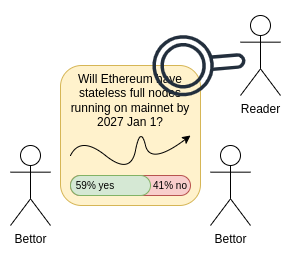

Instead of relying on a single AI model for governance, Buterin suggested a system he calls the “info finance approach”, first detailed in November 2024.

In this model, an open market allows participants to submit AI models, which are then subject to human evaluation and spot checks. This design encourages model diversity and creates incentives for contributors and external observers to identify and correct issues quickly.

Buterin explained that prediction markets can be used to gather insights about future events, providing a more robust and decentralized method of AI integration in governance, rather than hardcoding a single model that could be exploited.

He emphasized that this approach reduces the risk of exploits and aligns incentives across participants.

A fair and democratic system that could revolutionize human collaboration and participation.

“This type of ‘institution design’ approach, where you create an open opportunity for people with LLMs from the outside to plug in, rather than hardcoding a single LLM yourself, is inherently more robust.”

Other cases

Yet, that is not the first time that the use of AI in facilitating scams has been reported.

A Reuters investigation revealed that AI tools, particularly ChatGPT, were misused in Southeast Asia to craft convincing messages targeting U.S. real estate agents and cryptocurrency investors. These AI-generated communications deceived victims into depositing money into fake investment accounts, showing the potential for AI to be weaponized in financial fraud schemes.

Implications

While many crypto users have explored AI for portfolio management or trading bots, Buterin’s warnings highlight the potential risks of deploying AI in governance without careful safeguards. Integrating AI into decision-making systems should involve human oversight, rigorous testing, and diverse model participation to mitigate security vulnerabilities and prevent manipulation.

Disclaimer: All materials on this site are for informational purposes only. None of the material should be interpreted as investment advice. Please note that despite the nature of much of the material created and hosted on this website, HODL FM is not a financial reference resource, and the opinions of authors and other contributors are their own and should not be taken as financial advice. If you require advice. HODL FM strongly recommends contacting a qualified industry professional.