Artificial Intelligence has become an integral part of day-to-day life. Just a few years ago, knowledge of AI was limited to professionals, and its use was even more esoteric. But today, everybody knows about AI, and nearly everyone uses it. Kids use it to finish in seconds homework that would usually take hours, lovers use it to write love letters to their beloved, and programmers use it to write the very code they are paid to write themselves.

Regardless of your view, AI is a big win for the human race. It has made our lives and activities easier, faster, and more efficient. However, the question arises: "Does it make us safer?" AI is now built into everything, including smartphones and PCs, and with so much personal data potentially being shared, it is important to ask whether these tools are built with our privacy in mind. Even more importantly, do AI models like ChatGPT comply with data protection laws such as the General Data Protection Regulation (GDPR)?

AI Privacy and The GDPR

AI privacy is the term used to describe the practices and concerns around the ethical collection, storage, and usage of personal user information by AI systems. Simply put, AI privacy rules govern how user data should and should not be collected, stored, and used.

The GDPR is widely considered the toughest privacy and security law in the world. It is a legal framework that sets guidelines for collecting and processing the personal information of people in the EU, regardless of where the company is based.

The GDPR aims to give consumers control over their data and hold companies, including AI companies, responsible for how they handle and treat this information.

How Does ChatGPT Ensure Data Protection?

ChatGPT is undoubtedly the most popular of the thousands of AI models we have. It is perhaps largely responsible for the big AI boom. But how exactly does the face of the AI world handle and protect your data? How is ChatGPT data security maintained?

The OpenAI product prides itself on the many data protection techniques it uses to ensure ChatGPT privacy and GDPR compliance. Of these, three are particularly important:

The team at @OpenAI just fixed a critical account takeover vulnerability I reported few hours ago affecting #ChatGPT.

It was possible to takeover someone's account, view their chat history, and access their billing information without them ever realizing it.

Breakdown below 👇 pic.twitter.com/W4kXMNy6qI

— Nagli (@galnagli) March 24, 2023

Anonymization

Anonymization does not mean that your data will not be collected. ChatGPT will still use your conversation with the AI chatbot to improve its algorithm and responses. However, the system does this in a way that does not associate your identity with the data collected.

Encryption

Encryption is one of the most used data protection techniques in AI data privacy. ChatGPT encrypts any data collected from your interactions with it, ensuring no unauthorized parties can access it. This means that even if your data is intercepted somehow, it would be completely unreadable without the proper decryption key.

Transparency

Transparency is one of the data protection principles AI must comply with. It requires companies to make sure users know how their data is being used. OpenAI, ChatGPT's parent company, provides its users with privacy policies that explain how their data is collected, processed, stored, and used.

Key GDPR Compliance Challenges for AI chatbots

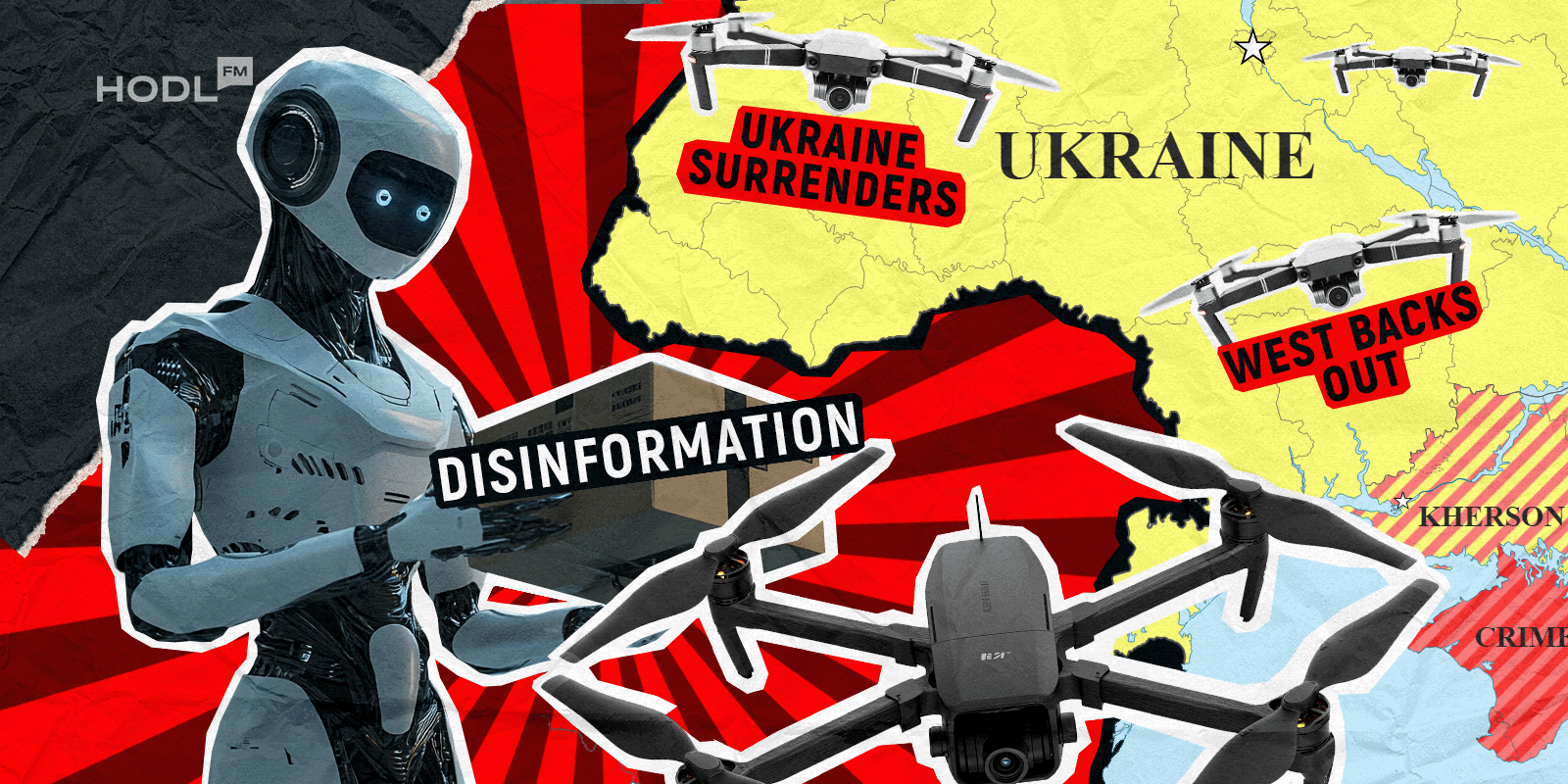

The GDPR has a well-developed framework of data privacy practices that AI chatbots must comply with. However, as much as ChatGPT and other chatbots work to follow these guidelines, some challenges still stand in the way of complete GDPR compliance.

"If you haven't opt'd in to data sharing, then your conversation would not be used to train future models"

So probably better to say "If you use chatgpt, opt out of data sharing" pic.twitter.com/z4n5LDofp0

— ☣️ Mr. The Plague ☣️ (@DotNetRussell) October 25, 2024

Data Minimization

This principle reminds AI platforms that user data is not an all-you-can-eat buffet and requires that chatbots collect only the minimum amount of data necessary for their purpose. As clear-cut as this sounds, determining that minimum is not easy, and it is one of the big dilemmas where the GDPR and AI meet.
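As a rough illustration of data minimization, the Python sketch below keeps only an allowlisted set of fields before anything is stored. The field names and the `minimize` helper are hypothetical and chosen for this example; they do not describe how ChatGPT or any other real chatbot handles data.

```python
# Hypothetical allowlist: the only fields this imaginary chatbot needs
# in order to answer a prompt. Everything else is dropped before storage.
ALLOWED_FIELDS = {"session_id", "message", "language"}


def minimize(record: dict) -> dict:
    """Return a copy of `record` that contains only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


incoming = {
    "session_id": "abc123",
    "message": "What is the GDPR?",
    "language": "en",
    "email": "user@example.com",       # not needed to answer the prompt
    "device_location": "52.52,13.40",  # not needed to answer the prompt
}

stored = minimize(incoming)
print(stored)
```

An allowlist is used here rather than a blocklist so that any new, unexpected field is dropped by default. Deciding what belongs on the allowlist is the hard part the principle describes.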

Data Re-Identification

Anonymization is important, but it is not a 100% guarantee. It certainly helps, but user data can still be re-identified when combined with other information, especially in complex data sets that contain indirect identifiers such as a postal code or a birth year.
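One common way to reason about this risk is to count how many records share the same combination of indirect identifiers. The sketch below is a minimal Python example under assumed data: the records, the field names, and the `smallest_group` helper are all made up for illustration.

```python
from collections import Counter


def smallest_group(records, quasi_identifiers):
    """Size of the smallest group of records that share the same values
    for every listed indirect identifier. Returns 0 for an empty data set."""
    if not records:
        return 0
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Made-up records with names already removed.
records = [
    {"zip": "10115", "birth_year": 1990, "topic": "loans"},
    {"zip": "10115", "birth_year": 1990, "topic": "travel"},
    {"zip": "10117", "birth_year": 1985, "topic": "health"},
]

k = smallest_group(records, ["zip", "birth_year"])
print(k)  # 1: the third record is the only one with its zip and birth year
```

A result of 1 means at least one person is unique on those fields, so anyone who already knows that person's postal code and birth year could pick out their record even though no name is stored.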

Transparency and Consent Management

While most chatbots try to maintain transparency, many users do not take the time to read AI chatbot privacy policies in detail. This leaves them with only a partial understanding of how their data is being used.

Security measures in ChatGPT and other AI platforms

With great power comes great responsibility, and given how much power these AI platforms have, many security measures have been put in place to protect AI data privacy, among other things. Some of these measures include:

Anonymization

Anonymization involves stripping away identifiable user information from data sets AI systems receive. It is usually done by altering, encrypting, or completely removing personal identifiers.
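The Python sketch below illustrates two of the approaches just mentioned: removing identifiers from text and altering a user ID into a keyed hash. The regular expressions, the placeholder labels, and the function names are assumptions made for this example, and the patterns are deliberately simple, so they will miss many real-world formats.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Remove identifiers: replace e-mail addresses and phone-like numbers."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Alter an identifier: replace it with a keyed hash (HMAC-SHA256).
    The same user always maps to the same token, but the token cannot be
    recomputed without the secret key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


message = "Mail me at jane.doe@example.com or call +1 555-123-4567."
print(redact(message))  # Mail me at [EMAIL] or call [PHONE].
print(pseudonymize("user-42", b"example-secret-key"))
```

Note that a keyed hash is pseudonymization rather than full anonymization: whoever holds the key can link tokens back to users, and the GDPR still treats pseudonymized data as personal data.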

Limited Data Retention Times

AI systems are incredibly powerful, but many are designed to keep conversation data only for a limited time to minimize privacy risks. Retention windows vary by provider and product, so it is worth checking each service's policy rather than assuming a fixed limit.
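A retention limit can be pictured as a periodic clean-up job that drops anything older than a cutoff. The Python sketch below assumes a 30-day window purely as a placeholder; the record layout and the `purge_expired` function are hypothetical and do not reflect any specific provider's policy.

```python
from datetime import datetime, timedelta, timezone

# Placeholder retention window chosen for this example only.
RETENTION = timedelta(days=30)


def purge_expired(conversations, now, retention=RETENTION):
    """Return only the conversations created within the retention window."""
    cutoff = now - retention
    return [c for c in conversations if c["created_at"] >= cutoff]


now = datetime(2024, 11, 1, tzinfo=timezone.utc)
conversations = [
    {"id": 1, "created_at": now - timedelta(days=45)},  # older than the window
    {"id": 2, "created_at": now - timedelta(days=5)},   # still inside the window
]

kept = purge_expired(conversations, now)
print([c["id"] for c in kept])  # [2]
```

Passing `now` in as an argument, instead of reading the clock inside the function, keeps the behaviour easy to test and audit.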

Privacy By Design

Many AI models are built using the “Privacy By Design” principle from the very start. This principle helps ensure that privacy is considered at every stage of the development process.

AI Privacy Best Practices for GDPR Compliance

When building a chatbot that meets GDPR standards, some best practices can be implemented.

ChatGPT analyzes your conversations and tracks usage data to improve responses and train AI models. 🧠 #AIData #ChatPrivacy pic.twitter.com/rcgaDEpNBC

— HUDI (@humandataincome) October 23, 2024

Ease Of Consent Approach

Consent is one of the most important aspects of handling digital data. AI companies should provide avenues for users to give explicit consent to their data collection and usage policies. Users should also be able to opt in or out at any time.
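As a minimal sketch of what opt-in consent could look like in code, the Python example below only allows a chat to be used for training when its author has an active opt-in on record. The `ConsentRegistry` class, its method names, and the chat layout are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """In-memory record of which users allow their chats to be used for training."""
    _opted_in: set = field(default_factory=set)

    def opt_in(self, user_id: str) -> None:
        self._opted_in.add(user_id)

    def opt_out(self, user_id: str) -> None:
        # discard() makes opting out safe even if the user never opted in.
        self._opted_in.discard(user_id)

    def may_train_on(self, user_id: str) -> bool:
        # Default is False: no recorded consent means no training use.
        return user_id in self._opted_in


def select_training_data(chats, registry):
    """Keep only chats whose author currently has an active opt-in."""
    return [chat for chat in chats if registry.may_train_on(chat["user_id"])]


registry = ConsentRegistry()
registry.opt_in("alice")
registry.opt_in("bob")
registry.opt_out("bob")  # bob changes his mind later

chats = [
    {"user_id": "alice", "text": "hello"},
    {"user_id": "bob", "text": "hi"},
    {"user_id": "carol", "text": "hey"},  # never asked, so never included
]

selected = select_training_data(chats, registry)
print([c["user_id"] for c in selected])  # ['alice']
```

The design choice worth noticing is the default: a user who has never been asked is treated the same as one who opted out.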

Frequent Security Tests

Securing user data is something one cannot be too careful with. Companies can strengthen their privacy techniques by ensuring timely and frequent security checks so bugs and security risks can be identified and fixed immediately.

It is often shocking how deeply AI has taken root in daily human life. With how far it has come, it is safe to say that AI is not going anywhere anytime soon; it has come to stay. Not every part of it is perfect yet, especially data security, but privacy in artificial intelligence is a work in progress, and make no mistake, it is in progress.

AI and data protection will continue to develop side by side. As more advanced AI models are built and more legislation is passed, we can expect a better, more privacy-focused approach to AI chatbot data protection that complies with the law.

Disclaimer: All materials on this site are for informational purposes only. None of the material should be interpreted as investment advice. Please note that despite the nature of much of the material created and hosted on this website, HODL FM is not a financial reference resource and the opinions of authors and other contributors are their own and should not be taken as financial advice. If you require advice of this sort, HODL FM strongly recommends contacting a qualified industry professional.