Amazon is allowing companies to bring their own generative AI models onto its AI development platform. This move is part of a package of measures the cloud computing giant is rolling out to keep up with competitors in the AI space.

The company’s cloud computing division hasn’t made the same splash as its AI-focused competitors, instead betting that companies want to work with their own AI models.

AWS’s Bedrock

As companies experiment with generative AI and put it to work on various tasks, many are building their own AI by customizing a vendor’s model or fine-tuning open-source models on their own data.

Amazon Web Services announced that “tens of thousands” of enterprises are using Bedrock, its platform for developing AI applications. Offering companies the ability to add “do-it-yourself” models to Bedrock facilitates collaboration between corporate developers and data specialists, said Swami Sivasubramanian, AWS’s vice president of AI and data.

Overall, Amazon is playing catch-up with its tech rivals in the AI race, though it is trying to strengthen its position through new offerings on AWS and in its retail operations. AWS lacks a defining AI partnership like Microsoft’s with OpenAI, or a flagship product like Copilot, Microsoft’s generative AI assistant for its business software. In November, AWS unveiled Amazon Q, an AI-powered chatbot for businesses and developers, and it also offers its own Titan models, but neither is as well known as Google’s chatbot and Gemini models.

So far, AWS has positioned itself as a neutral technology provider for AI, offering a wide range of models through Bedrock, from Anthropic’s proprietary models to open-source ones such as Meta Platforms’ new Llama 3. According to Sivasubramanian, AWS’s model evaluation tool, which became generally available on Tuesday, will cut the time enterprises spend testing and comparing different models.

Three months after Bedrock became generally available last September, most AWS customers were using more than one model to build AI applications, according to Amazon. Microsoft and Google also let customers use AI models from other companies, as well as open-source models from Meta and Paris-based startup Mistral AI.

Advancements in AI Models: Microsoft’s Phi-3

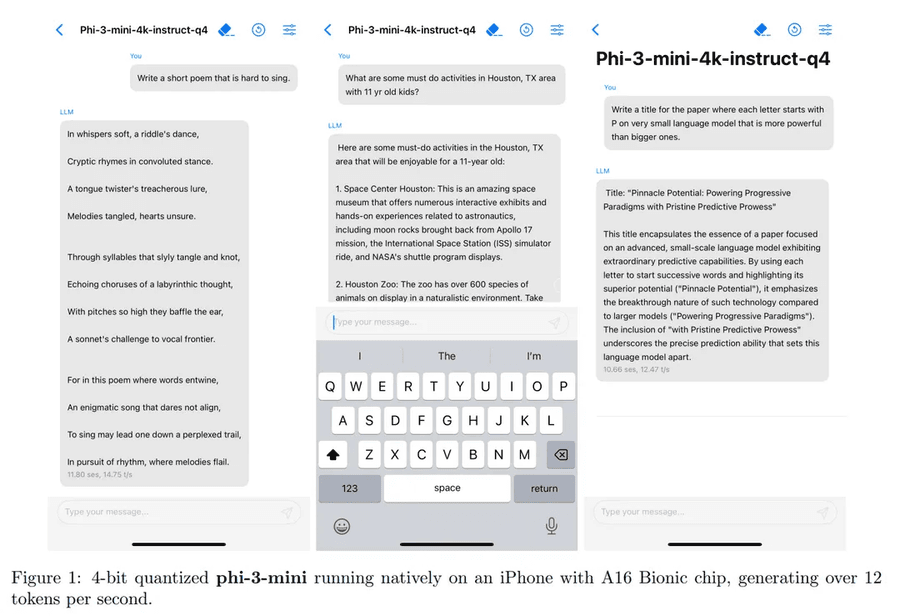

Microsoft announced the release of Phi-3, the third generation of its Phi family of small language models (SLMs), calling it “the most capable and cost-effective small language models (SLMs) available.” The company claims Phi-3 outperforms models of comparable size and even some larger ones.

According to the company, Phi-3 comes in several versions, the smallest of which is Phi-3 Mini, a 3.8-billion-parameter model trained on 3.3 trillion tokens. Despite its relatively small size and training set (Llama 3, by comparison, was trained on more than 15 trillion tokens), Phi-3 Mini can handle a context window of up to 128,000 tokens in its long-context variant. That puts it on par with GPT-4 and ahead of Llama 3 and Mistral Large in context length.

One of the most significant advantages of Phi-3 Mini is that it can run locally on an ordinary smartphone. Microsoft tested the model on an iPhone 14, where it ran smoothly, generating 14 tokens per second.

Thanks to its large context window, much bigger models such as Llama 3 (on Meta.ai) and Mistral Large could run out of context during a long conversation or prompt well before this lightweight model does.

Still, the broader market trend works in AWS’s favor: many companies prefer to use AI services from the cloud provider they already work with. According to some IT directors, generative AI is easier to deploy on cloud platforms where corporate data is already stored. This benefits AWS, the world’s largest cloud provider, because companies that use AI services also spend more on the cloud.

Disclaimer: All materials on this site are for informational purposes only. None of the material should be interpreted as investment advice. Please note that despite the nature of much of the material created and hosted on this website, HODL FM is not a financial reference resource and the opinions of authors and other contributors are their own and should not be taken as financial advice. If you require advice of this sort, HODL FM strongly recommends contacting a qualified industry professional.